NOTE: Chris Hill wrote a very detailed post on Reddit with more information on ReFS with integrity streams, and also created a pretty impressive automated PowerShell script. His results are very concerning, and I’d advise against trusting ReFS until further notice. I will redo my testing and update this post once we get to the bottom of this. See his comment below, or the Reddit thread in question: https://www.reddit.com/r/DataHoarder/comments/scdclm/testing_refs_data_integrity_streams_corrupt_data/

Rationale

The year is 2022, and if you are serious about storing your data, you likely have an impressive NAS setup with ZFS, ECC RAM, etc. I have gone down the ZFS rabbit hole, but I’m still running a gigabit network, and adding a NAS or server to my setup would require an upgrade to at least 2.5GbE; otherwise the network becomes the performance bottleneck.

In the past, I have explored the option of using ReFS with integrity streams instead, as a ZFS-like replacement, but there are some horror stories out there (check here and here as examples), and I dropped the idea.

With the release of Windows 11, I’m planning on upgrading my desktop so I can run nested virtualization on my AMD Ryzen, and decided to revisit this topic. Can ReFS be trusted with the latest Windows release? Let’s find out!

Setup

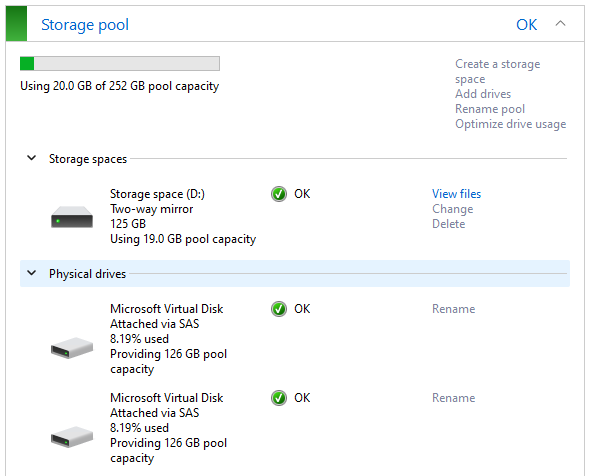

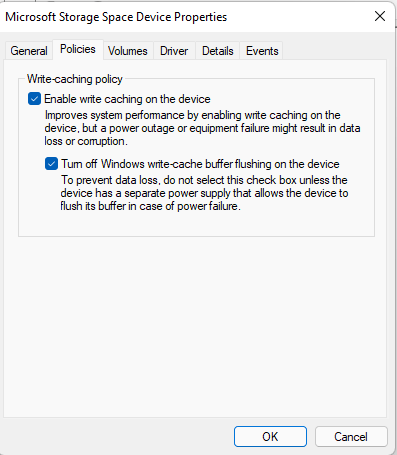

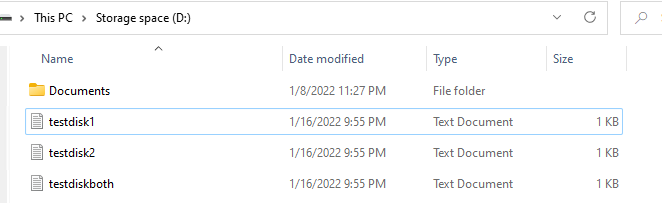

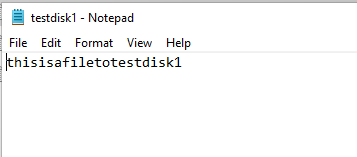

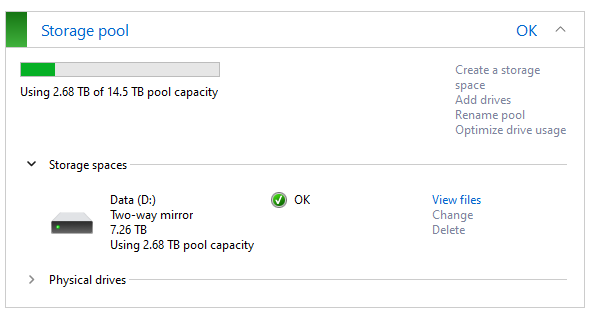

For the purpose of this test, I will keep things simple. I’m going to run a Windows 11 Pro for Workstations VM with 2 additional disks attached. These disks will be set up as a mirrored pair in Storage Spaces, with ReFS and integrity streams enabled. To simulate issues, I will create 3 text files and do the following:

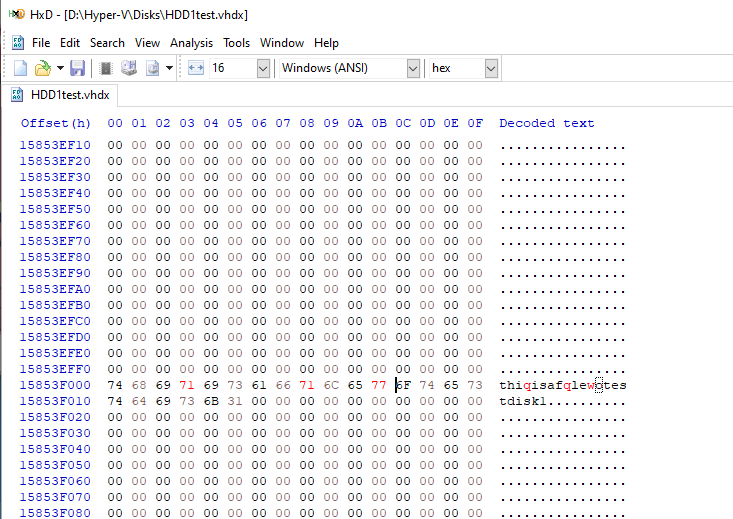

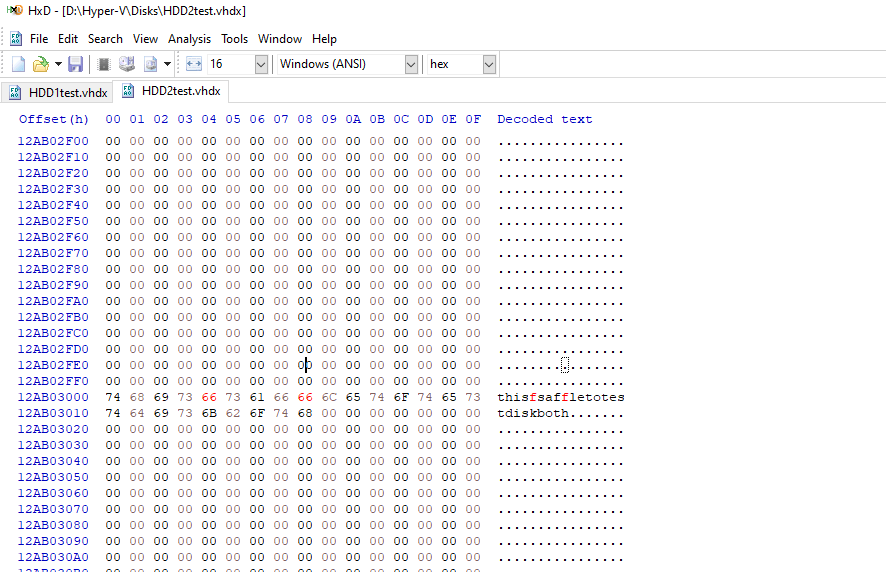

- Scenario A: Flip some bits in the first file on disk 1

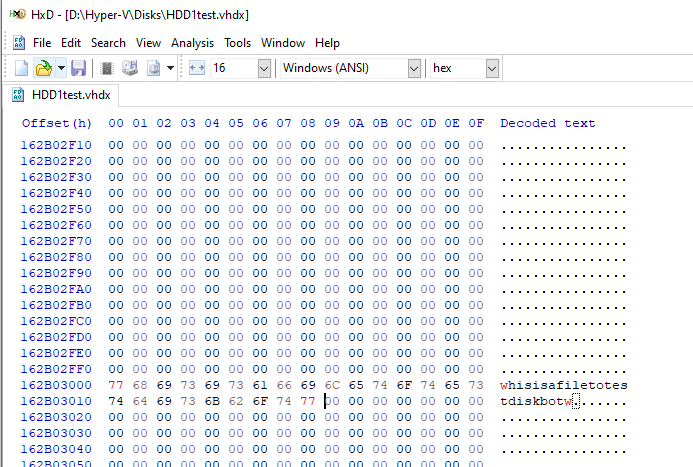

- Scenario B: Flip some bits in the second file on disk 2

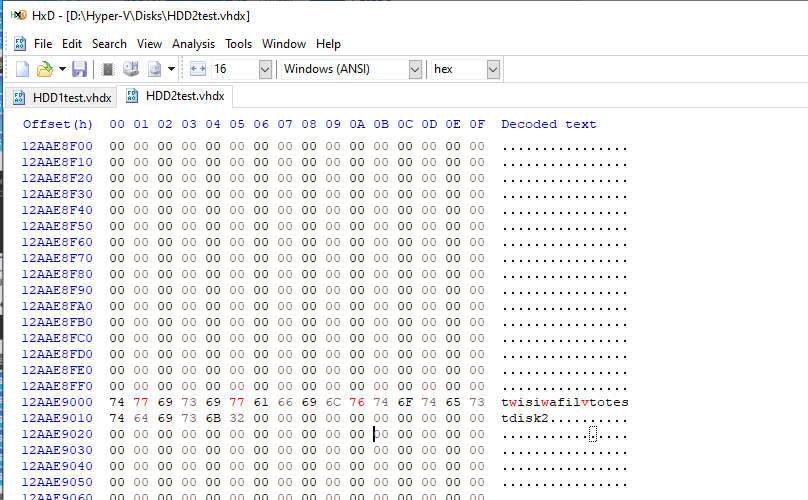

- Scenario C: Flip some bits in the third file on both disks
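The post does not say which tool was used to flip the bits, so the following is only a minimal Python sketch of the operation itself, applied to an in-memory buffer rather than to a raw disk. The function name `flip_bits` and its `offset` and `mask` parameters are hypothetical, introduced here for illustration.

```python
def flip_bits(data: bytes, offset: int, mask: int = 0x01) -> bytes:
    """Return a copy of data with the bits selected by mask flipped at offset."""
    if not 0 <= offset < len(data):
        raise IndexError("offset is outside the buffer")
    if not 0 <= mask <= 0xFF:
        raise ValueError("mask must fit in a single byte")
    corrupted = bytearray(data)
    corrupted[offset] ^= mask  # XOR toggles exactly the bits set in mask
    return bytes(corrupted)


original = b"This is file 1\n"
damaged = flip_bits(original, offset=0, mask=0x01)
print(original)
print(damaged)
```

Because XOR is its own inverse, applying the same mask at the same offset a second time restores the original bytes. The file size never changes, which is what makes this kind of corruption silent without checksums.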

From Microsoft’s documentation (you can read it here), the expectation is that ReFS will be able to correct Scenarios A and B, but will fail on Scenario C, because no intact copy is left to repair from. I will be using the default cluster size of 4K as recommended here.
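To make that expectation concrete, here is a toy Python model of a checksummed mirror. It is not how ReFS is implemented: CRC32 stands in for whatever checksum ReFS actually uses, the stored checksum is assumed to be undamaged, and the names `MirroredBlock`, `make_block`, and `read_block` are hypothetical.

```python
import zlib
from dataclasses import dataclass


@dataclass
class MirroredBlock:
    copies: list   # one bytearray per disk in the mirror
    checksum: int  # checksum recorded when the data was written


def make_block(data: bytes, disks: int = 2) -> MirroredBlock:
    """Write the same data to every disk and record its checksum."""
    return MirroredBlock([bytearray(data) for _ in range(disks)], zlib.crc32(data))


def read_block(block: MirroredBlock) -> bytes:
    """Return verified data, repairing bad copies from a good one if possible."""
    good = next(
        (bytes(c) for c in block.copies if zlib.crc32(c) == block.checksum), None
    )
    if good is None:
        # Scenario C: every copy fails verification, nothing to repair from.
        raise OSError("all copies failed checksum verification")
    for index, copy in enumerate(block.copies):
        if zlib.crc32(copy) != block.checksum:
            block.copies[index] = bytearray(good)  # overwrite the bad copy
    return good


# Scenario A: corrupt the copy on disk 1 only.
scenario_a = make_block(b"file 1")
scenario_a.copies[0][0] ^= 0x01
print(read_block(scenario_a))

# Scenario C: corrupt both copies.
scenario_c = make_block(b"file 3")
scenario_c.copies[0][0] ^= 0x01
scenario_c.copies[1][0] ^= 0x01
try:
    read_block(scenario_c)
except OSError as error:
    print("read failed:", error)
```

In this model the read in Scenario A succeeds and silently heals the bad copy, while Scenario C can only be detected, not repaired.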

Results

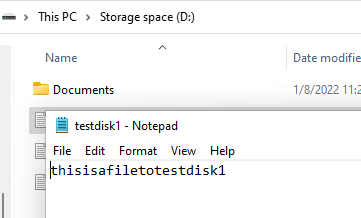

In the first test, I tried to open file 1, and it opened fine, with no signs of corruption.

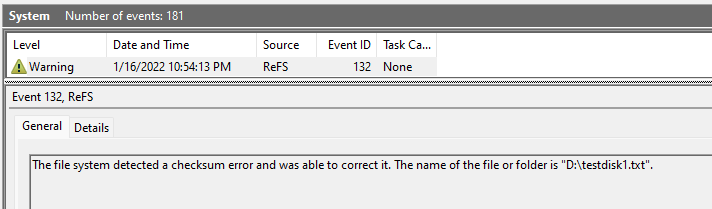

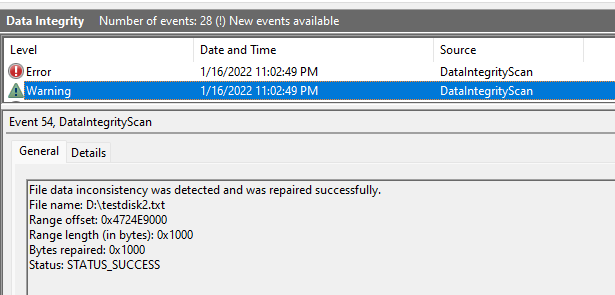

Checking the Event Viewer, we can see that ReFS was able to automatically fix the file for us. Yay!

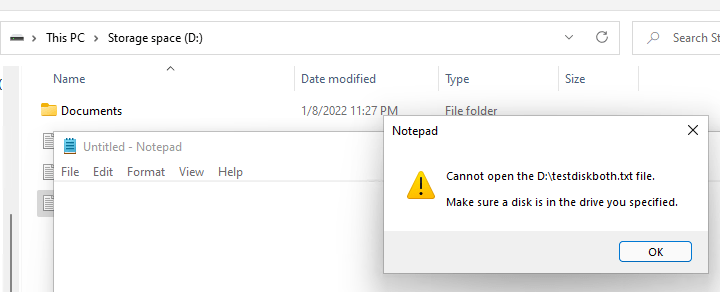

Now, if we try to open the file we broke on both disks, here is what we get.

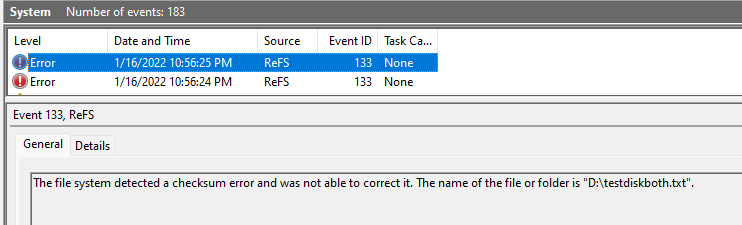

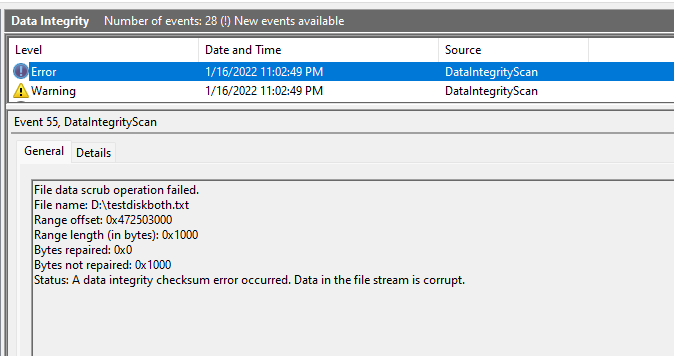

And the corresponding two events (I’m assuming one for each disk that failed checksum).

Note that ReFS did not delete the file, unlike what other people using parity disks have experienced (mentioned earlier in this post). I’m not sure if this is a new behavior or just my luck.

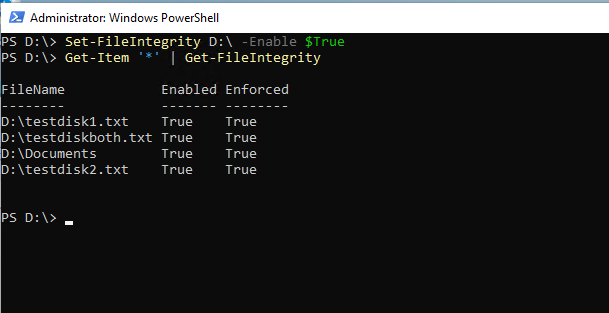

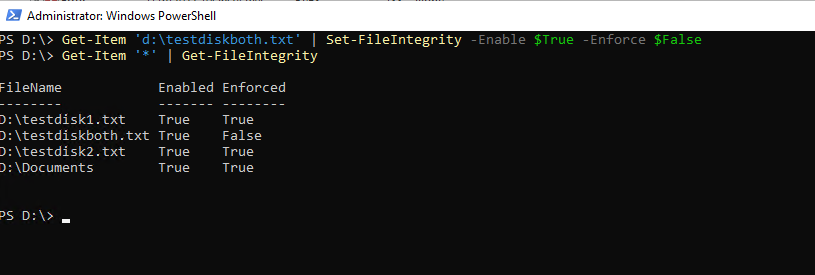

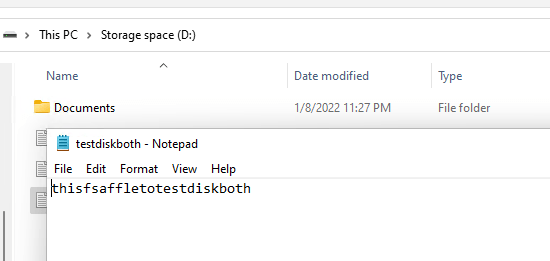

Interestingly enough, since the file did not get removed, I can actually unblock it and still access it by setting the enforce flag to False (in PowerShell, this is the `-Enforce` parameter of `Set-FileIntegrity`). You might not want to lose all the content, after all.

As you can see above, the file loads but the corrupted bits remain 🙁

Bonus Content: Enabling Data Scrubbing

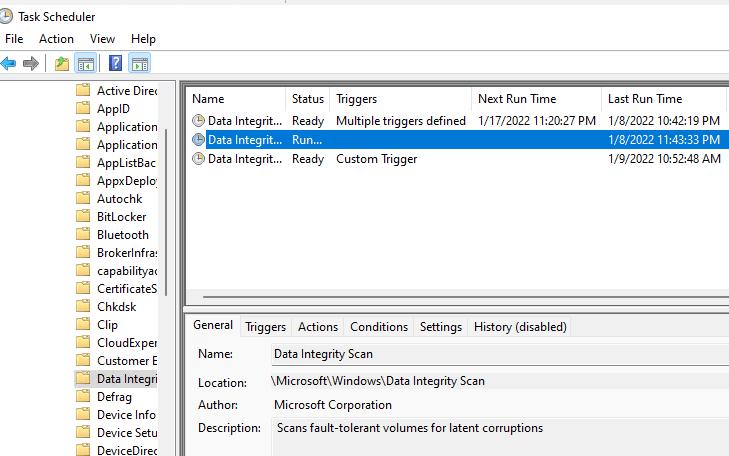

ZFS has a scrubber to help catch a phenomenon called bit rot before it spreads to every copy. Interestingly enough, ReFS has an equivalent data integrity verification task, but it comes disabled by default. You can go to Task Scheduler -> Microsoft -> Windows -> Data Integrity, and pick the 2nd task from the list. Set a weekly schedule and you should be good to go.

Just to try it out and see if it works, I ran it manually, and sure enough, it found the inconsistency in file 2 on disk 2 (which I had not opened yet, so the ReFS fix had not been triggered), and also the file I corrupted on both disks.
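The idea behind a scrub is to read everything proactively instead of waiting for a file to be opened. As a rough user-level analogue, independent of ReFS and of the scheduled task above, the following Python sketch records SHA-256 hashes of a folder and later re-reads every file to compare. The names `sha256_of`, `build_manifest`, and `scrub` are hypothetical, and the manifest is assumed to be stored somewhere safe.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its current hash."""
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def scrub(root: Path, manifest: dict[str, str]) -> list[str]:
    """Re-read every file in the manifest and list those that no longer match."""
    damaged = []
    for name, expected in manifest.items():
        try:
            actual = sha256_of(root / name)
        except OSError:
            damaged.append(name)  # missing or unreadable counts as damaged
            continue
        if actual != expected:
            damaged.append(name)
    return damaged
```

A typical use would be to call `build_manifest` once right after copying data onto the volume, then run `scrub` on a schedule. Unlike the ReFS task, this can only report damage, not repair it, and it cannot tell corruption apart from a legitimate edit to the file.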

Pretty cool, huh?

Long Term Testing

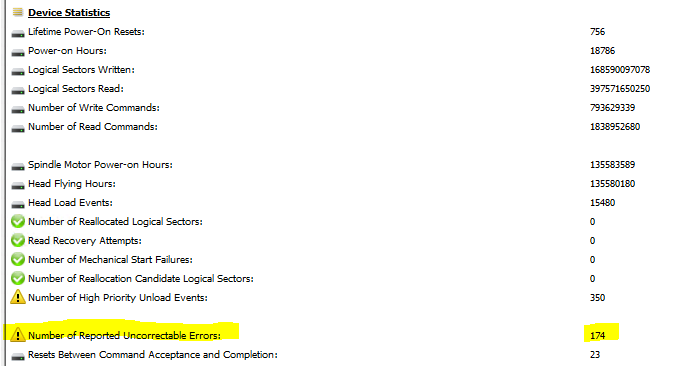

As much as synthetic tests can provide some insight into how the underlying technology operates, I want to put ReFS to the test with some real data. I have 2 Seagate SMR disks (if you don’t know about SMR, it is a poor fit for constant write operations), and one of them is already failing (174 uncorrected errors reported by S.M.A.R.T.). I will run a few Hyper-V workloads on these disks, with integrity streams also enabled. Stay tuned!

Final Thoughts

Although this test is far from proving that ReFS can be trusted to secure your data, it does indicate that the filesystem performs automatic error correction when you enable integrity streams on a mirrored Storage Spaces volume. Another test worth running is against a parity volume, although write performance on those with Storage Spaces tends to be horrendous. If you decide to try it, feel free to comment and post some results!

Ultimately, a decent backup strategy is needed with any file system you choose, but ReFS can help in those scenarios where silent data corruption is happening. And of course, if you are truly worried about corruption at all levels, don’t forget to pair this setup with ECC memory.
